Customizing / Shaping Rewards

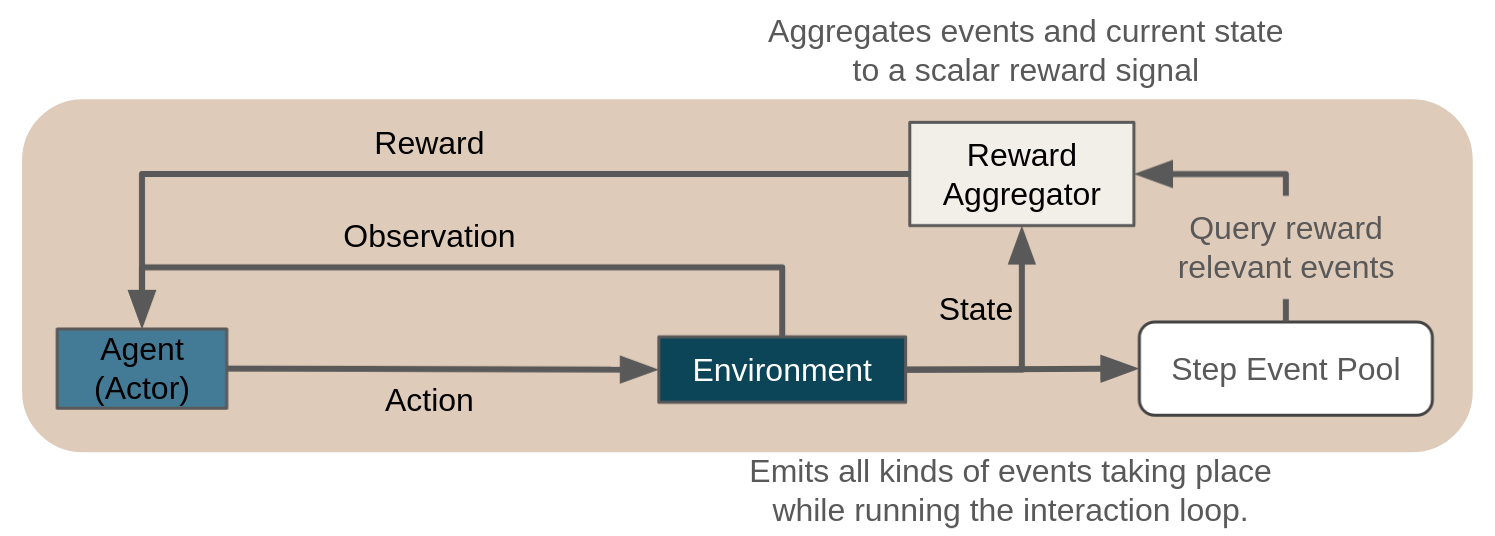

In a reinforcement learning problem, the overall goal is defined via an appropriate reward signal. In particular, reward is attributed to certain problem-specific key events and to the current environment state. During training, the agent then has to discover a policy (behaviour) that maximizes the cumulative future reward over time. Given a meaningful reward signal, such a policy will be able to successfully address the decision problem at hand.
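To make "cumulative future reward" concrete, a common formalization is the discounted return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ..., which can be computed backwards as G_t = r_t + gamma * G_{t+1}. The discount factor gamma is an assumption of this sketch; the text above does not fix one, and gamma = 1.0 gives the plain sum of rewards.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float = 0.99) -> List[float]:
    """Return G_t = r_t + gamma * G_{t+1} for every step t of one finished episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # walk backwards so that each G_t reuses the already computed G_{t+1}
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


if __name__ == "__main__":
    print(discounted_returns([1.0, 0.0, 2.0], gamma=0.5))  # prints [1.5, 1.0, 2.0]
```

Reward shaping changes the r_t entering this sum. A policy that maximizes G_t therefore optimizes whatever the reward signal encodes.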

From a technical perspective, reward customization in Maze is based on the environment state in combination with the general event system (which also serves other purposes), and is implemented via RewardAggregators.

In summary, after each step the reward aggregator gets access to the environment state, along with all the events the environment dispatched during that step (e.g., a new item was replenished to inventory). It can then calculate arbitrary rewards based on these. As a result, the reward signal can be modified and shaped based on different events and their characteristics simply by plugging in a different reward aggregator, without modifying the environment itself.
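The following self-contained toy mimics this flow outside of Maze. All class and function names (ToyEventLog, ToyInventoryEnv, penalize_replenishment, reward_stock) are hypothetical and are not part of Maze's API. The sketch only illustrates the idea: the environment dispatches events during a step, and an injected aggregator turns the new state plus that step's events into a reward afterwards.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class ToyEventLog:
    """Hypothetical stand-in for an event system: records the events of one step."""

    def __init__(self) -> None:
        self._events: Dict[str, List[dict]] = defaultdict(list)

    def dispatch(self, name: str, **attributes) -> None:
        self._events[name].append(attributes)

    def query(self, name: str) -> List[dict]:
        return list(self._events.get(name, []))

    def clear(self) -> None:
        self._events.clear()


# A (toy) reward aggregator maps (state, events of the last step) to a scalar.
Aggregator = Callable[[dict, ToyEventLog], float]


class ToyInventoryEnv:
    """Hypothetical env whose reward logic is injected instead of hard-coded."""

    def __init__(self, aggregator: Aggregator, initial_inventory: int = 2) -> None:
        self.state = {"inventory": initial_inventory}
        self.events = ToyEventLog()
        self.aggregator = aggregator

    def step(self, demand: int) -> float:
        self.events.clear()  # only events from the current step are visible
        shortfall = max(0, demand - self.state["inventory"])
        for _ in range(shortfall):
            # every missing piece is replenished, which dispatches an event
            self.events.dispatch("piece_replenished")
        self.state["inventory"] += shortfall - demand
        # after the step, the aggregator sees the new state plus this step's events
        return self.aggregator(self.state, self.events)


def penalize_replenishment(state: dict, events: ToyEventLog) -> float:
    # event-based: -1 per replenished piece
    return -1.0 * len(events.query("piece_replenished"))


def reward_stock(state: dict, events: ToyEventLog) -> float:
    # state-based: small bonus per piece left in inventory
    return 0.5 * state["inventory"]


if __name__ == "__main__":
    print(ToyInventoryEnv(penalize_replenishment).step(3))  # prints -1.0
    print(ToyInventoryEnv(reward_stock).step(1))  # prints 0.5
```

Swapping penalize_replenishment for reward_stock changes the reward without touching ToyInventoryEnv.step. This is the property the reward aggregator design is meant to provide.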

Below we show how to get started with reward customization by configuring the CoreEnv and by implementing a custom reward.

List of Features

Maze event-based reward computation allows the following:

- Easy experimentation with different reward signals.
- Implementation of custom rewards without the need to modify the original environment (simulation).
- Computing simple rewards based on the environment state, or using the full flexibility of observing all events from the last step.
- Combining multiple different objectives into one multi-objective reward signal.
- Computing multiple rewards in the same env, each based on a different set of components (multi-agent).
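A simple way to combine several objectives into one reward signal is a weighted sum of individual reward terms. The sketch below assumes that approach and works on per-step event counts. The function combine_objectives, the TERMS table and the event name order_delivered are hypothetical illustrations, not Maze's mechanism for multi-objective rewards.

```python
from typing import Callable, Dict, Mapping, Tuple

# Hypothetical reward term: maps the event counts of the last step to a float.
RewardTerm = Callable[[Mapping[str, int]], float]


def combine_objectives(
    terms: Mapping[str, Tuple[float, RewardTerm]],
    event_counts: Mapping[str, int],
) -> Tuple[float, Dict[str, float]]:
    """Weighted sum of several reward terms; also returns the unweighted parts."""
    components = {name: term(event_counts) for name, (_, term) in terms.items()}
    total = sum(terms[name][0] * value for name, value in components.items())
    return float(total), components


TERMS = {
    # penalize every raw piece that had to be replenished
    "raw_piece_usage": (1.0, lambda c: -float(c.get("piece_replenished", 0))),
    # reward every delivered order (hypothetical event name)
    "orders_delivered": (0.5, lambda c: float(c.get("order_delivered", 0))),
}

if __name__ == "__main__":
    # prints (-0.5, {'raw_piece_usage': -2.0, 'orders_delivered': 3.0})
    print(combine_objectives(TERMS, {"piece_replenished": 2, "order_delivered": 3}))
```

Returning the unweighted components alongside the total lets you log each objective separately. The weights then act as explicit tuning knobs.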

Configuring the CoreEnv

The following config snippet shows how to specify reward computation for a CoreEnv via the field reward_aggregator. You only have to set the reference path of the RewardAggregator, and reward computation will be carried out accordingly in all experiments based on this config.

For further details on the remaining entries of this config, you can read up on how to customize Core- and MazeEnvs.

# @package env
_target_: maze_envs.logistics.cutting_2d.env.maze_env.Cutting2DEnvironment

# parametrizes the core environment (simulation)
core_env:
  max_pieces_in_inventory: 1000
  raw_piece_size: [100, 100]
  demand_generator:
    _target_: mixed_periodic
    n_raw_pieces: 3
    m_demanded_pieces: 10
    rotate: True
  # defines how rewards are computed
  reward_aggregator:
    _target_: maze_envs.logistics.cutting_2d.reward.default.DefaultRewardAggregator

# defines the conversion of actions to executions
action_conversion:
  - _target_: maze_envs.logistics.cutting_2d.space_interfaces.action_conversion.dict.ActionConversion
    max_pieces_in_inventory: 1000

# defines the conversion of states to observations
observation_conversion:
  - _target_: maze_envs.logistics.cutting_2d.space_interfaces.observation_conversion.dict.ObservationConversion
    max_pieces_in_inventory: 1000
    raw_piece_size: [100, 100]

Implementing a Custom Reward

This section contains a concrete implementation of a reward aggregator for the built-in 2D cutting environment. It bases its reward solely on the events from the last step, which is more suitable here than checking the current environment state.

In summary, the reward aggregator first declares which events it is interested in (the get_interfaces method). At the end of the step, after all events have been accumulated, the reward aggregator is asked to calculate the reward (the summarize_reward method). This is the core of the reward computation: you can see how the events are queried and how the reward is assembled from them.

"""Assigns negative reward for relying on raw pieces for delivering an order."""
from typing import List, Optional

from maze.core.annotations import override
from maze.core.env.maze_state import MazeStateType
from maze.core.env.reward import RewardAggregatorInterface
from maze.core.events.pubsub import Subscriber
from maze_envs.logistics.cutting_2d.env.events import InventoryEvents


class RawPieceUsageRewardAggregator(RewardAggregatorInterface):
    """
    Reward scheme for the 2D cutting env penalizing raw piece usage.

    :param reward_scale: Reward scaling factor.
    """

    def __init__(self, reward_scale: float):
        super().__init__()
        self.reward_scale = reward_scale

    @override(Subscriber)
    def get_interfaces(self) -> List:
        """
        Specification of the event interfaces this subscriber wants to receive events from.
        Every subscriber must implement this configuration method.

        :return: A list of interface classes.
        """
        return [InventoryEvents]

    @override(RewardAggregatorInterface)
    def summarize_reward(self, maze_state: Optional[MazeStateType] = None) -> float:
        """
        Summarize reward based on the raw piece replenishment events of the last step,
        and return it as a scalar.

        :param maze_state: Not used by this reward aggregator.
        :return: the summarized scalar reward.
        """
        # iterate replenishment events and assign reward accordingly
        reward = 0.0
        for _ in self.query_events(InventoryEvents.piece_replenished):
            reward -= 1.0

        # rescale reward with provided factor
        reward *= self.reward_scale

        return reward
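To sanity-check the arithmetic of summarize_reward without a running environment, its logic can be mirrored in plain Python. The function name raw_piece_usage_reward is hypothetical, and so is the idea of passing the queried events in as an iterable. Neither is part of Maze; the iterable simply stands in for what self.query_events(InventoryEvents.piece_replenished) yields.

```python
from typing import Iterable


def raw_piece_usage_reward(replenish_events: Iterable[object], reward_scale: float) -> float:
    """Plain-Python mirror of RawPieceUsageRewardAggregator.summarize_reward (hypothetical helper)."""
    reward = 0.0
    # -1 for every piece_replenished event of the last step
    for _ in replenish_events:
        reward -= 1.0
    # rescale with the configured factor
    return reward * reward_scale
```

With reward_scale set to 0.1, a step with three replenishment events yields approximately -0.3. A step without raw piece usage yields 0.0, so the agent is only ever penalized for consuming raw pieces.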

When adding a new reward aggregator, you have to (1) implement the RewardAggregatorInterface and (2) make sure that it is importable from your Python path.

Besides that, you only have to provide its reference path in the reward_aggregator field:

reward_aggregator:
  _target_: my_project.custom_reward.RawPieceUsageRewardAggregator
  reward_scale: 0.1
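Conceptually, the _target_ entry is a dotted import path to a class, and the remaining keys such as reward_scale are passed to its constructor. Maze configs follow Hydra's _target_ convention. The sketch below is a simplified illustration of that idea using importlib, assumed to approximate the core of the real mechanism. It is not Hydra's or Maze's code, and the real instantiation handles more cases (e.g. nested objects). It also doubles as a quick check that a custom aggregator is importable from your Python path. The helper name instantiate_from_config is hypothetical.

```python
import importlib
from typing import Any, Dict


def instantiate_from_config(config: Dict[str, Any]) -> Any:
    """Simplified `_target_` instantiation: import the class, pass the other keys as kwargs."""
    params = dict(config)  # copy so the caller's config is left untouched
    target = params.pop("_target_")
    # split "package.module.ClassName" into module path and attribute name
    module_path, _, attr_name = target.rpartition(".")
    if not module_path:
        raise ValueError(f"'{target}' is not a dotted import path")
    try:
        module = importlib.import_module(module_path)
    except ModuleNotFoundError as exc:
        raise ImportError(f"could not import '{module_path}'; is it on your Python path?") from exc
    cls = getattr(module, attr_name)  # raises AttributeError for a wrong class name
    # remaining keys (e.g. reward_scale) become constructor keyword arguments
    return cls(**params)


if __name__ == "__main__":
    # a stdlib class stands in for a real reward aggregator here
    print(instantiate_from_config({"_target_": "fractions.Fraction", "numerator": 1, "denominator": 10}))  # prints 1/10
```

With your own project installed, instantiate_from_config({"_target_": "my_project.custom_reward.RawPieceUsageRewardAggregator", "reward_scale": 0.1}) would construct the aggregator. Without it on the path, the same call raises an ImportError pointing at the missing module.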

Where to Go Next

Additional options for customizing environments can be found under the entry “Environment Customization” in the sidebar.

For further technical details, we highly recommend reading up on the Maze event system.

To see another application of the event system, you can read up on the Maze logging system.